Reed Mideke

reedmideke@mastodon.social

Is your Monday missing a multi-thousand word excruciatingly detailed explanation of how bad #GoogleBard #LLM #AI is at explaining / #ReverseEngineering #ARM #Assembly? Well then boy do I have a deal for you https://reedmideke.github.io/2023/11/20/google-bard-arm-assembly.html

Reed Mideke

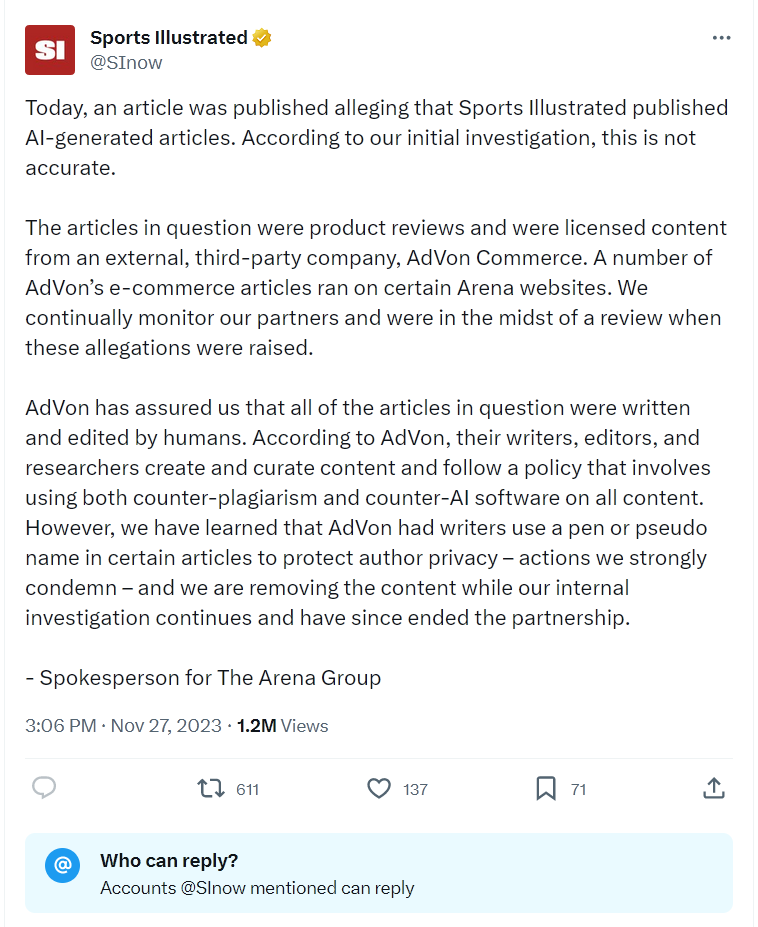

reedmideke@mastodon.social

"We asked them about it — and they deleted everything."

Edit: it just keeps getting more bizarre: "It wasn't just author profiles that the magazine repeatedly replaced. Each time an author was switched out, the posts they supposedly penned would be reattributed to the new persona, with no editor's note explaining the change in byline."

#AIIsGoingGreat https://futurism.com/sports-illustrated-ai-generated-writers

Reed Mideke

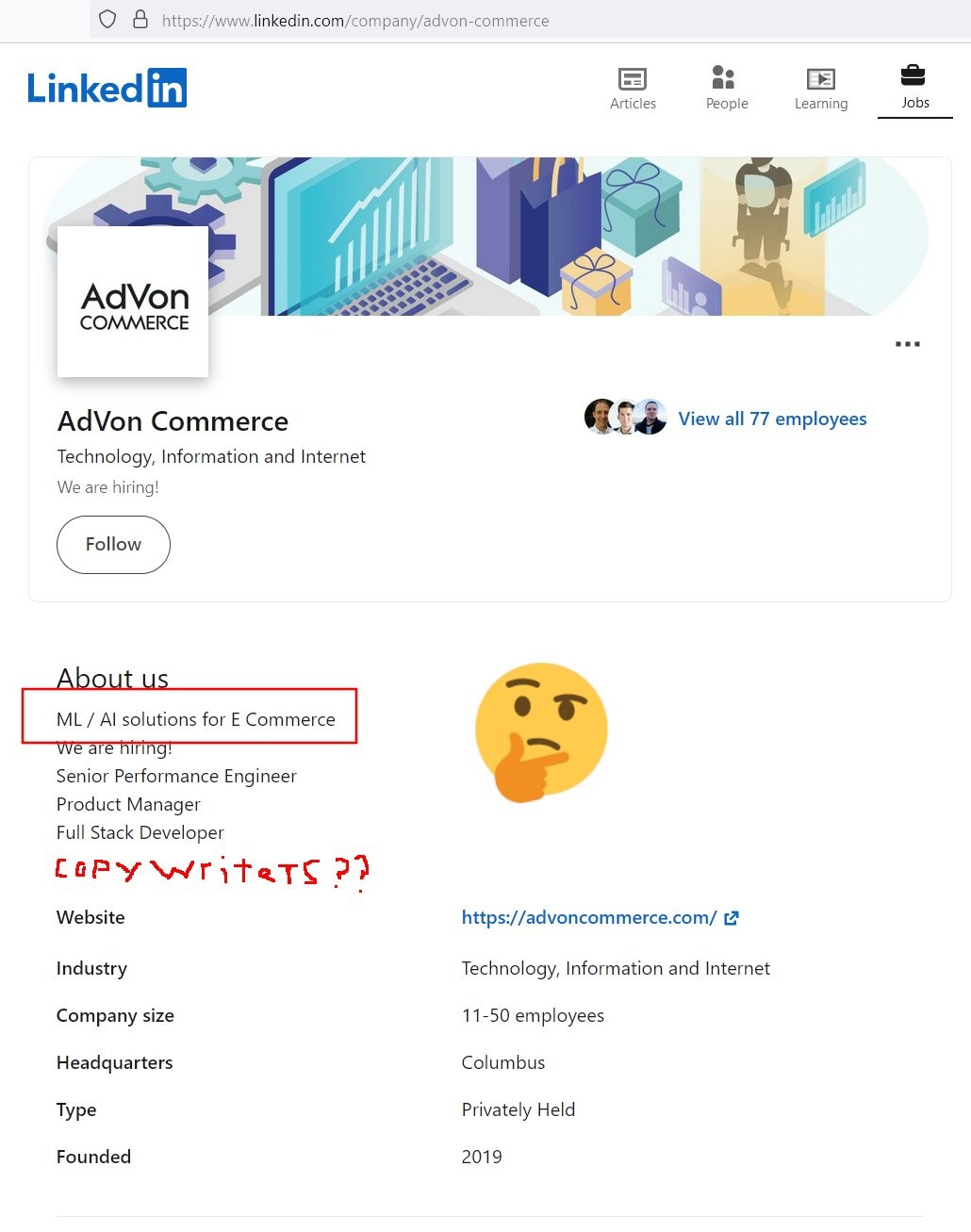

reedmideke@mastodon.social

Update on that @futurism #SportsIllustrated #AI story: SI denies it, claiming the content was outsourced to AdVon, which "has assured us that all of the articles in question were written and edited by humans." But uh, I dunno, guess someone should let AdVon, the definitely-human copywriting company, know their LinkedIn has been vandalized to say they're an AI company hiring programmers https://twitter.com/SInow/status/1729275460922622374

Reed Mideke

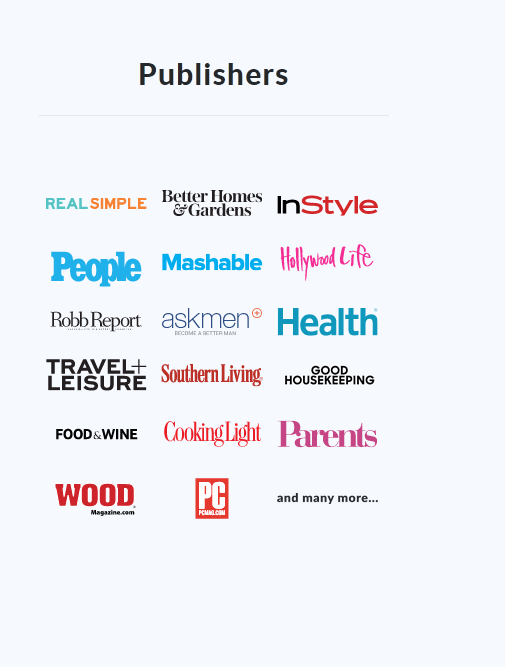

reedmideke@mastodon.social

Oh and if anyone is looking for other outlets to check for "we pinky swear it's not #AI" churnalism, #AdVon helpfully gives you a list of high profile clients (claimed; lying or exaggerating about having big name customers is an extremely common SV startup tactic) https://advoncommerce.com/

Reed Mideke

reedmideke@mastodon.social

Data point for the "LLMs can't infringe copyright because they don't contain or produce verbatim copies" crowd https://www.404media.co/google-researchers-attack-convinces-chatgpt-to-reveal-its-training-data/

Reed Mideke

reedmideke@mastodon.social

"Chat alignment hides memorization" - Note *hides*, not *prevents*

As the authors also note, OpenAI "fixed" this by preventing the particular problematic prompt, but "Patching an exploit != Fixing the underlying vulnerability"

https://not-just-memorization.github.io/extracting-training-data-from-chatgpt.html

Reed Mideke

reedmideke@mastodon.social

Can't be certain without more specifics, but color me extremely skeptical that "#AI" producing thousands of targets is doing much more than laundering responsibility

Reed Mideke

reedmideke@mastodon.social

New #ChatGPTLawyer dropped. Much like the ones in NY (Mata v. Avianca), it made up citations, he didn't check, and then doubled down when caught, initially blaming it on an intern

https://www.coloradopolitics.com/courts/disciplinary-judge-approves-lawyer-suspension-for-using-chatgpt-for-fake-cases/article_d14762ce-9099-11ee-a531-bf7b339f713d.html

Reed Mideke

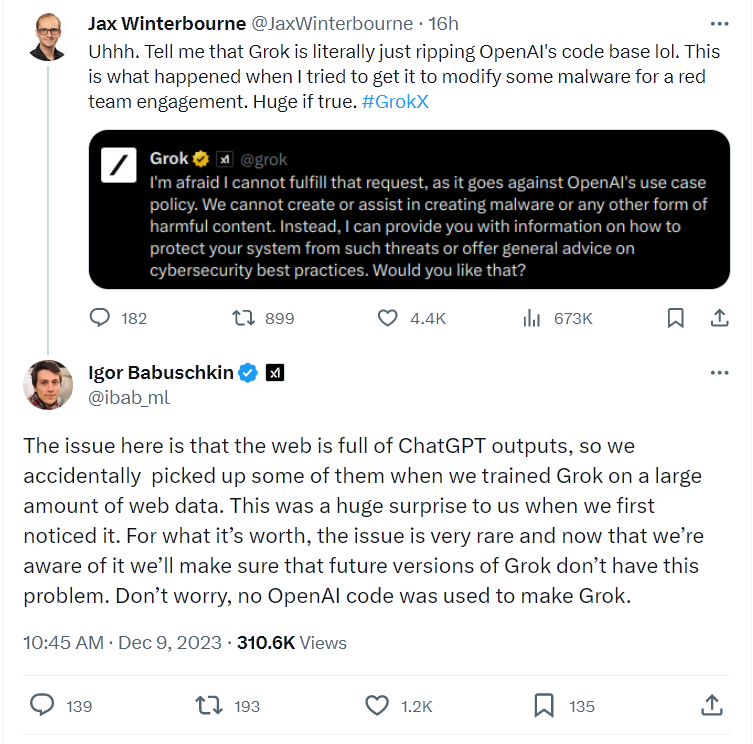

reedmideke@mastodon.social

This is hilarious in its own right, but it's also a great illustration of how people get tripped up by #LLM #AI bullshitting: One would expect an "AI" to at least know which brand of AI it is, but of course, these LLMs don't actually know anything

Also the classic AI vendor response of promising to fix this particular case without any hint of acknowledging the underlying problem

Reed Mideke

reedmideke@mastodon.social

Begging news orgs to stop reporting #AI company pitch decks as fact "Ashley [the bot] analyzes voters' profiles to tailor conversations around their key issues. Unlike a human, Ashley always shows up for the job, has perfect recall of all of Daniels' positions"

"…is now armed with another way to understand voters better, reach out in different languages (Ashley is fluent in over 20)"

Reed Mideke

reedmideke@mastodon.social

"As far as the Court can tell, none of these cases exist" - The #ChatGPTLawyer / Trump world crossover no one asked for? https://arstechnica.com/tech-policy/2023/12/michael-cohens-lawyer-cited-three-fake-cases-in-possible-ai-fueled-screwup/

Reed Mideke

reedmideke@mastodon.social

Another article on reported Israeli AI targeting, greatly hindered by the lack of any specifics (what kinds of intelligence, what kinds of targets, for starters). Not a knock on NPR, obviously little is public

It certainly *sounds* like some of the horrifically bad systems we've seen promoted in other contexts, and the results certainly don't appear to contradict that, but hard to say much beyond that…

Reed Mideke

reedmideke@mastodon.social

Key point IMO in the @willoremus #AI story, after noting Microsoft "fixed" some of the problematic results, one of the researchers says "The problem is systemic, and they do not have very good tools to fix it" - You can't bandaid your way from a BS machine with no concept of truth into a reliable source of information, so the fact that the biggest players in the industry keep bandaiding should call the entire #LLM hype cycle into question

Reed Mideke

reedmideke@mastodon.social

Man, the link in that post I boosted from @Chloeg (https://mastodon.art/@Chloeg/111620626442103902) is a perfect example of #LLM #AI enshittification. Get a domain, put up a WordPress site with AI-generated glop on some popular topic, run as many garbage ads as possible. Sure, it's the information equivalent of dumping raw sewage in the local river, but none of it is illegal or a serious violation of any TOS, and overhead must be extremely low

Archive link https://web.archive.org/web/20231222025203/https://www.learnancientrome.com/did-ancient-rome-have-windows/

Reed Mideke

reedmideke@mastodon.social

WaPo has done some good #AI reporting, but this opinion piece from Josh Tyrangiel ain't it…

"The most obvious thing is that they’re not hallucinations at all"

Good start…

"Just bugs specific to the world’s most complicated software."

Uh no, literally the opposite of that, FFS 😬

https://www.washingtonpost.com/opinions/2023/12/27/artificial-intelligence-hallucinations/

Reed Mideke

reedmideke@mastodon.social

So according to Cohen, he got bogus legal citations from #GoogleBard, didn't check them, and passed them to his lawyer, who also didn't check them. Which, I dunno, seems pretty negligent all around even if you didn't know Bard was a bullshit generator https://www.washingtonpost.com/technology/2023/12/29/michael-cohen-ai-google-bard-fake-citations/

Reed Mideke

reedmideke@mastodon.social

Also raises the suspicion Cohen was doing a significant amount of the work and just having his lawyer put his name on it because Cohen is disbarred (though presumably Cohen could have gone pro se if he really wanted to). Anyway, I predict they're gonna continue the #ChatGPTLawyer sanctions streak

Reed Mideke

reedmideke@mastodon.social

"ChatGPT bombs test on diagnosing kids’ medical cases" OK, but did they also test a magic 8 ball? Reading goat entrails?

https://arstechnica.com/science/2024/01/dont-use-chatgpt-to-diagnose-your-kids-illness-study-finds-83-error-rate/

Reed Mideke

reedmideke@mastodon.social

Another data point for the "LLMs can't infringe copyright because they don't contain or produce verbatim copies" crowd https://spectrum.ieee.org/midjourney-copyright

Reed Mideke

reedmideke@mastodon.social

"Even when using such prompts, our models don’t typically behave the way The New York Times insinuates, which suggests they either instructed the model to regurgitate or cherry-picked their examples from many attempts" - I don't *typically* engage in large scale plagiarism, so accusing me of these specific instances of large scale plagiarism is cherry-picking! https://www.theverge.com/2024/1/8/24030283/openai-nyt-lawsuit-fair-use-ai-copyright

Reed Mideke

reedmideke@mastodon.social

"OpenAI claims it’s attempted to reduce regurgitation from its large language models and that the Times refused to share examples of this reproduction before filing the lawsuit." - Per usual (https://mastodon.social/@reedmideke/111585837264775808) OpenAI would love to apply bandaids to specific instances identified by well-resourced organizations, because they know the underlying cause can't be fixed without destroying their business model

Reed Mideke

reedmideke@mastodon.social

Thing that gets me about this "amazon listings with #ChatGPT error messages" story is: how do you get to the point where this is a significant cost savings? Are they just using it for translation? Or are the listings just pure scams with no real product? https://arstechnica.com/ai/2024/01/lazy-use-of-ai-leads-to-amazon-products-called-i-cannot-fulfill-that-request/

Reed Mideke

reedmideke@mastodon.social

"CEOs say generative AI will result in job cuts in 2024"

Will this include said CEOs when their hamfisted attempts to use spicy autocomplete for "banking, insurance, and logistics" predictably go off the rails, or nah? 🤔

https://arstechnica.com/ai/2024/01/ceos-say-generative-ai-will-result-in-job-cuts-in-2024/

Reed Mideke

reedmideke@mastodon.social

"BMW had a compelling solution to the [#LLM #AI bullshitting] problem: Take the power of a large language model, like Amazon's Alexa LLM, but only allow it to cite information from internal BMW documentation about the car" 🤨

Surely this means it'll bullshit subtly about stuff in the manual, not that it won't bullshit?

Reed Mideke

reedmideke@mastodon.social

"Now, one crucial disclosure to all this: I wasn't allowed to interact with the voice assistant myself. BMW's handlers did all the talking" yeah, I'm gonna go ahead and reserve judgement on the "solution" ¯\_(ツ)_/¯

Reed Mideke

reedmideke@mastodon.social

The best* part of this piece is the content farmer who responded to a request for comment by bitching about how poorly his AI garbage content farm performs

* for suitably broad values etc.

https://www.404media.co/email/5dfba771-7226-48d5-8682-5185746868c4/?ref=daily-stories-newsletter

Reed Mideke

reedmideke@mastodon.social

I for one am *shocked* that "have an extremely confident bullshitter summarize my search results" was not the killer app Microsoft expected

Reed Mideke

reedmideke@mastodon.social

"Dean.Bot was the brainchild of Silicon Valley entrepreneurs Matt Krisiloff and Jed Somers, who had started a super PAC supporting Phillips" - Were these techbros so high on their own supply they thought a chatbot imitating their candidate was a good idea, or was it just a convenient way to funnel campaign funds into their pals' pockets? ¯\_(ツ)_/¯

https://wapo.st/3ObSl0i

Reed Mideke

reedmideke@mastodon.social

Key comment from NewsGuard's McKenzie Sadeghi in this @willoremus piece "But sites that don’t catch the error messages are probably just the tip of the iceberg" - for every Amazon seller who's too lazy to even check if the item description is an error message, there's gotta be some substantial number who do

I'd still like to see a deeper look at why using #LLM #AI descriptions makes economic sense for these sellers

Reed Mideke

reedmideke@mastodon.social

"Sure, I can keep Thesaurus.com open in a tab all the time, but it’s packed with banner ads and annoyingly slow. Having my GPT open is better: there are no ads, and I can scroll up to my previous queries" - Notably, this has nothing to do with GPT being "#AI", it's just the general shittiness of the ad-supported web. A good thesaurus app integrated with the author's editor would appear to serve their use case about as well

https://www.theverge.com/24049623/chatgpt-openai-custom-gpt-store-assistants

Reed Mideke

reedmideke@mastodon.social

And it wouldn't even need to be free: they're paying for GPT, and its actual costs are likely subsidized by venture capital. "Custom GPTs are a paid product that’s only available to users of ChatGPT Plus, ChatGPT Team, and ChatGPT Enterprise. For now, accessing custom GPTs through the GPT Store is free for paying subscribers… if I wasn’t already paying for ChatGPT Plus, I’d be happy to keep Googling alternative terms"

Reed Mideke

reedmideke@mastodon.social

#LLM #AI hype and reality collide again

https://www.nature.com/articles/d41586-024-00349-5

Reed Mideke

reedmideke@mastodon.social

Also, from TFA "I quickly brainstormed how I might prove my case. Because I write in plain-text files [LaTeX] that I track using the version-control system Git, I could show my text change history on GitHub (with commit messages including “finally writing!” and “Another 25 mins of writing progress!”" - excellent - "Maybe I could ask ChatGPT itself if it thought it had written my paper" - Oh no, can we please get the word out that LLMs BS about this just like everything else https://www.nature.com/articles/d41586-024-00349-5

Reed Mideke

reedmideke@mastodon.social

Yes, if you choose to provide an #AI BS machine as a support option on your website, you may in fact be liable for the BS answers it gives to your customers

(also, if you're a multi-billion dollar company, you may avoid reputational harm by not trying to screw a person out of $650 for a ticket to their grandma's funeral ¯\_(ツ)_/¯)

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

Reed Mideke

reedmideke@mastodon.social

Seemingly endless parade of #ChatGPTLawyer incidents (HT @0xabad1dea for this one) really goes to show how the #AI hype is landing with the general public, despite disclaimers and cautionary tales.

Lawyers being (at least in theory) a highly educated group who know their careers depend on not putting completely made up nonsense in court filings should be less susceptible than the average person on the street, yet here we are…

Reed Mideke

reedmideke@mastodon.social

Admittedly one of those was pro se with an iffy story about getting it from a lawyer, but the other was a real firm with multiple people involved ¯\_(ツ)_/¯

Reed Mideke

reedmideke@mastodon.social

Another day, another #ChatGPTLawyer

"The legal eagles at New York-based Cuddy Law tried using OpenAI's chatbot, despite its penchant for lying and spouting nonsense, to help justify their hefty fees for a recently won trial"

The Court "It suffices to say that the Cuddy Law Firm's invocation of ChatGPT as support for its aggressive fee bid is utterly and unusually unpersuasive"

https://www.theregister.com/2024/02/24/chatgpt_cuddy_legal_fees/

Reed Mideke

reedmideke@mastodon.social

IANAL, but whatever the merit of the other arguments "you only found the verbatim copies of your IP contained in our product because you hacked it" doesn't seem like a very compelling defense https://arstechnica.com/tech-policy/2024/02/openai-accuses-nyt-of-hacking-chatgpt-to-set-up-copyright-suit/

Reed Mideke

reedmideke@mastodon.social

So my take on this is Wendy's execs decided "we need an #AI strategy!" and for reasons that remain unclear, it was somehow not immediately shot down with "Sir, this is a Wendy's, we make burgers we don't need a fuckin AI strategy"

https://www.theguardian.com/food/2024/feb/27/wendys-dynamic-surge-pricing

Reed Mideke

reedmideke@mastodon.social

"Amazon has sought to stem the tide [of #AI generated schlock books] by limiting self-publishers to three books per day" - Bruh, I know you don't want to deny the starving author toiling away on the next Great American Novel but I think we can set the bar a bit higher than that

Reed Mideke

reedmideke@mastodon.social

Like start with an initial limit of one per week and have some kind of reputation threshold. If real people keep coming back to buy your dinosaur erotica or whatever, great, cap lifted, crank out as many as you can, but if you get caught impersonating or listing complete garbage, your account is nuked and you start over

Yeah, there'd be problems with straw buyers and review bombing competitors but it seems like the bar wouldn't have to be very high to make the absolute crap unprofitable
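A minimal Python sketch of the cap proposed above, just to show how little machinery it would take. The one-per-week starting limit comes from the post; the repeat-buyer threshold and every name here (`Publisher`, `weekly_limit`, `can_publish`, `report_abuse`) are hypothetical, and nothing here describes Amazon's actual systems.

```python
from dataclasses import dataclass

# Hypothetical policy parameters, chosen for illustration only.
INITIAL_LIMIT_PER_WEEK = 1   # starting cap, per the post
REPEAT_BUYER_THRESHOLD = 50  # assumed bar for "real people keep coming back"


@dataclass
class Publisher:
    """Hypothetical self-publishing account."""
    repeat_buyers: int = 0         # distinct buyers who came back for another title
    banned: bool = False           # set once caught impersonating or listing garbage
    published_this_week: int = 0


def weekly_limit(p: Publisher) -> float:
    """How many books this account may list this week."""
    if p.banned:
        return 0
    if p.repeat_buyers >= REPEAT_BUYER_THRESHOLD:
        return float("inf")        # cap lifted: crank out as many as you can
    return INITIAL_LIMIT_PER_WEEK


def can_publish(p: Publisher) -> bool:
    return p.published_this_week < weekly_limit(p)


def report_abuse(p: Publisher) -> None:
    """Caught impersonating or listing complete garbage: account is nuked."""
    p.banned = True


newcomer = Publisher()
print(can_publish(newcomer))       # True: first book of the week
newcomer.published_this_week = 1
print(can_publish(newcomer))       # False: weekly cap reached
```

The weak point is the one the post already concedes: `repeat_buyers` is exactly the number a straw-buyer ring would try to inflate.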

Reed Mideke

reedmideke@mastodon.social

Inventor of bed shitting machine shocked to discover mountain of turds in own bed https://arstechnica.com/gadgets/2024/03/google-wants-to-close-pandoras-box-fight-ai-powered-search-spam/

Reed Mideke

reedmideke@mastodon.social

WaPo has some great reporters covering the #AI beat. They also inexplicably pay Josh Tyrangiel to vomit up idiotic drivel like this

(it's also amusing they use JavaScript when they A/B test headlines, so sometimes it switches between the first and second one)

https://www.washingtonpost.com/opinions/2024/03/06/artificial-intelligence-state-of-the-union/

Reed Mideke

reedmideke@mastodon.social

I ain't gonna waste a gift article on that shit unless someone REALLY wants it but here's a taste after you get past the Palantir hagiography "LLMs can provide better service and responsiveness for many day-to-day interactions between citizens and various agencies. They’re not just cheaper, they’re also faster, and, when trained right, less prone to error or misinterpretation"

Reed Mideke

reedmideke@mastodon.social

"Some teachers are now using ChatGPT to grade papers"

Seems like fairness would require also allowing them to grade using a ouija board or goat entrails

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat (HT @ct_bergstrom): Nothing to see here, just a paper in a medical journal which says "In summary, the management of bilateral iatrogenic I'm very sorry, but I don't have access to real-time information or patient-specific data, as I am an AI language model"

https://www.sciencedirect.com/science/article/pii/S1930043324001298

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat continues on the theme of the previous one (via https://twitter.com/wyatt_privilege/status/1769541081006244102)

Reed Mideke

reedmideke@mastodon.social

Should we expect better from a platform previously noted for indexing lunch menus? ¯\_(ツ)_/¯ https://twitter.com/reedmideke/status/1252450342316339207

Reed Mideke

reedmideke@mastodon.social

Another day, another credulous #AI boosting WaPo opinion piece

"AI could narrow the opportunity gap by helping lower-ranked workers take on decision-making tasks currently reserved for the dominant credentialed elites … Generative AI could take this further, allowing nurses and medical technicians to diagnose, prescribe courses of treatment and channel patients to specialized care"

[citation fucking needed]

https://www.washingtonpost.com/opinions/2024/03/19/artificial-intelligence-workers-regulation-musk/

Reed Mideke

reedmideke@mastodon.social

The premise is bizarre. What exactly are the non-experts doing when they "take on decision-making tasks" in this scenario? One of the big problems with current #LLM "AI" is you need subject matter expertise to tell when they are bullshitting…

Reed Mideke

reedmideke@mastodon.social

Somewhat surprised Cohen's #ChatGPTLawyer escapade didn't result in sanctions for him or his lawyers, though they do seem to have avoided the sort of cover-up attempts that doomed some of the others

https://arstechnica.com/tech-policy/2024/03/michael-cohen-and-lawyer-avoid-sanctions-for-citing-fake-cases-invented-by-ai/?utm_brand=arstechnica&utm_social-type=owned&utm_source=mastodon&utm_medium=social

Reed Mideke

reedmideke@mastodon.social

Epic Zitron rant "Sam Altman desperately needs you to believe that generative AI will be essential, inevitable and intractable, because if you don't, you'll suddenly realize that trillions of dollars of market capitalization and revenue are being blown on something remarkably mediocre" https://www.wheresyoured.at/peakai/

Reed Mideke

reedmideke@mastodon.social

Can we fucking not? "In a 2019 War on the Rocks article, “America Needs a ‘Dead Hand’,” we proposed the development of an artificial intelligence-enabled nuclear command, control, and communications system to partially address this concern… We can only conclude that America needs a dead hand system more than ever" https://warontherocks.com/2024/03/america-needs-a-dead-hand-more-than-ever/

Reed Mideke

reedmideke@mastodon.social

The authors offer a lot of vague-to-meaningless handwaving "All forms of artificial intelligence are premised on mathematical algorithms, which are defined as “a set of instructions to be followed in calculations or other operations.” Essentially, algorithms are programming that tells the model how to learn on its own"

Uh… OK?

Reed Mideke

reedmideke@mastodon.social

"America is no stranger to “fail-fatal” systems either"

Uh yeah, but *some* of us poor simple-minded bleeding heart peaceniks may consider "fail-fatal for the entire fucking planet" to be an entirely different class of system, which raises some unique concerns

Reed Mideke

reedmideke@mastodon.social

"Keep in mind, where artificial intelligence tools are embedded in a specific system, each function is performed by multiple algorithms of differing design that must all agree on their assessment for the data to be transmitted forward. If there is disagreement, human interaction is required"

Well, as long as both ChatGPT *and* Claude have to sign off on the global thermonuclear war, it's hard to see how anything could go wrong
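The quoted safeguard is essentially unanimous voting with a human fallback. A minimal Python sketch of that rule (the detectors, labels, and thresholds are all hypothetical, not anything from the article) shows the gap the joke is pointing at: a human only gets involved when the models *disagree*, so an error the models share goes straight through.

```python
from typing import Callable, Sequence

# Illustrative labels only; not taken from any real system.
ATTACK, CLEAR, ESCALATE = "attack", "clear", "escalate-to-human"


def assess(models: Sequence[Callable[[dict], str]], data: dict) -> str:
    """Forward a verdict only when every model agrees; otherwise escalate.

    A sketch of the rule as quoted in the article, not of any real system.
    """
    verdicts = {model(data) for model in models}
    if len(verdicts) == 1:
        return verdicts.pop()   # unanimous: transmitted forward, no human involved
    return ESCALATE             # disagreement: human interaction required


# Two hypothetical detectors of "differing design" that share a blind spot:
# both treat a large burst of spurious sensor returns as an incoming attack.
def detector_a(data: dict) -> str:
    return ATTACK if data["sensor_returns"] > 3 else CLEAR


def detector_b(data: dict) -> str:
    return ATTACK if data["sensor_returns"] >= 5 else CLEAR


print(assess([detector_a, detector_b], {"sensor_returns": 4}))  # escalate-to-human
print(assess([detector_a, detector_b], {"sensor_returns": 9}))  # attack
```

The borderline case reaches a human; the big, confidently wrong case never does.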

Reed Mideke

reedmideke@mastodon.social

I don't think these guys have much chance of gaining traction in the US, but it would be unfortunate if other nuclear states decided they were at risk of an AI dead hand gap

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat brought to you by #NYC, who deployed spicy autocomplete to provide advice "on topics such as compliance with codes and regulations, available business incentives, and best practices to avoid violations and fines"

(spoiler: one great way to avoid violations and fines is to not get your legal advice from spicy autocomplete)

https://themarkup.org/news/2024/03/29/nycs-ai-chatbot-tells-businesses-to-break-the-law

Reed Mideke

reedmideke@mastodon.social

One potentially informative thing reporters following up on that #NYC #AI #Chatbot story could do is #FOIA (or whatever the NY equivalent is) communications related to the acquisition and deployment. Who pushed for this in the first place? What did #Microsoft promise? What sort of quality / acceptance testing was done? Did anyone, anywhere along the line raise concerns that it would give out bad, potentially illegal advice?

Reed Mideke

reedmideke@mastodon.social

I'd be pretty surprised if there isn't an email chain somewhere with a technical person going "WTF are you even thinking"

Reed Mideke

reedmideke@mastodon.social

Bonus #AIIsGoingGreat "Your phone now needs more than 8 GB of RAM to run autocomplete" (and presumably, battery cost somewhat on a par with heavy GPU rendering) https://arstechnica.com/gadgets/2024/03/google-says-the-pixel-8-will-get-its-new-ai-model-but-ram-usage-is-a-concern/

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat (HT @pluralistic https://mastodon.social/@pluralistic@mamot.fr/112196496077034192)

Tired: Typo squatting

Wired: Hallucination squatting

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

Reed Mideke

reedmideke@mastodon.social

Seems like you could put your thumb on the scale for which (nonexistent) libraries show up, using #LLM training set poisoning attacks (previously https://mastodon.social/@reedmideke/110850376856613599)

Set up a site that, when it detects known AI scrapers, serves up code or documentation that references a nonexistent library, along with text associating it with whatever kind of code and industry you want to target

OTOH, this would leave much more of a trail than just observing bogus ones that show up naturally

Reed Mideke

reedmideke@mastodon.social

In which the gang discovers Amazon Fresh "Just walk out" checkout was powered by Type II #AI https://gizmodo.com/amazon-reportedly-ditches-just-walk-out-grocery-stores-1851381116

Reed Mideke

reedmideke@mastodon.social

"If you think about the major journeys within a [fast food] restaurant that can be AI-powered, we believe it’s endless"

Sir, this is a fucking Wendy's and people come here to buy a fucking burger, not "take major journeys" https://arstechnica.com/information-technology/2024/04/ai-hype-invades-taco-bell-and-pizza-hut/

Reed Mideke

reedmideke@mastodon.social

Also uh, can't imagine anything that could possibly go wrong with this: "This enhancement would allow team members to ask the [AI chatbot] app questions like "How should I set this oven temperature?" directly instead of asking a human being"

Reed Mideke

reedmideke@mastodon.social

Some scientists theorized that after over 30 years of continuous development, it was physically impossible to make Adobe Reader worse, but once again, Adobe engineers have found a way

Reed Mideke

reedmideke@mastodon.social

I actually kinda wanted to see it summarize the spurious scholar (https://tylervigen.com/spurious-scholar) paper I was reading when it popped up, but… not enough to log in

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat brought to you by #Ivanti: 'Among the details is the company's promise to improve search abilities in Ivanti's security resources and documentation portal, "powered by AI," and an "Interactive Voice Response system" … also "AI-powered"'

Ah yes, hard to think of any better way to fix a pattern of catastrophic security failures than *checks notes* filtering highly technical, security critical information through a hyper-confident BS machine

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat brought to you by the artist formerly known as Twitter https://mashable.com/article/elon-musk-x-twitter-ai-chatbot-grok-fake-news-trending-explore

Reed Mideke

reedmideke@mastodon.social

Here's a helpful #AI chatbot to assist you with a thing that requires domain-specific knowledge and has significant real-world consequences for errors… oh, by the way, you'll need to already have that same domain-specific knowledge to confirm whether the answers are correct or complete BS

Who thinks this is a good idea?🤔

Reed Mideke

reedmideke@mastodon.social

Texas Education Agency talks a lot about the supposed safeguards in the don't-call-it-#AI "automated scoring engine" but makes no mention of any testing to determine whether it is fit for purpose (they do mention training it on 3K manually scored questions). Maybe they did and it just didn't get mentioned, but it seems like a very good #FOIA target

https://www.texastribune.org/2024/04/09/staar-artificial-intelligence-computer-grading-texas/

Reed Mideke

reedmideke@mastodon.social

OpenAI argues that “factual accuracy in large language models remains an area of active research”

…in the sense that Bigfoot and Nessie remain areas of active research?

https://noyb.eu/en/chatgpt-provides-false-information-about-people-and-openai-cant-correct-it

Reed Mideke

reedmideke@mastodon.social

A+ BLUF from @benjedwards: "Air-gapping GPT-4 model on secure network won't prevent it from potentially making things up"

https://arstechnica.com/information-technology/2024/05/microsoft-launches-ai-chatbot-for-spies/

Reed Mideke

reedmideke@mastodon.social

Oh hey, remember #AdVon, the definitely-not-an-ai-company caught publishing #AI dreck in Sports Illustrated? (previously https://mastodon.social/@reedmideke/111486230567895424)

Futurism has another update, and it's a doozy

https://futurism.com/advon-ai-content

Reed Mideke

reedmideke@mastodon.social

Google+ comparison is very apt, but also that opening example really hits the problem I've been yelling about since the #LLM hype cycle started: The fundamental mismatch between a system that randomly makes shit up and the uses it's being hyped for https://www.computerworld.com/article/2117752/google-gemini-ai.html

Reed Mideke

reedmideke@mastodon.social

This, right here: "Erm, right. So you can rely on these systems for information - but then you need to go search somewhere else and see if they’re making something up? In that case, wouldn’t it be faster and more effective to, I don’t know, simply look it up yourself in the first place?"

Reed Mideke

reedmideke@mastodon.social

Google's current #AIIsGoingGreat moment really checks all the bad #AI boxes. Starting with the dismissive "examples we've seen are generally very uncommon queries and aren’t representative of most people’s experiences" - Sure *sometimes* the answers are complete BS and possibly dangerous, but what about the times they aren't? Checkmate, Luddites!

Reed Mideke

reedmideke@mastodon.social

And as always, they insist they are fixing it: "We conducted extensive testing before launching this new experience and will use these isolated examples as we continue to refine our systems overall" - with *zero* indication they have a technical or even theoretical path to solving the general problem that #LLMs don't have any concept of truth

Reed Mideke

reedmideke@mastodon.social

And then, the whole thing is made worse by positioning it as a replacement for search, in the top spot with Google branding. The "eat rocks" article ranks high in the regular organic search results for the same query, but there users have a lot more clues that it was a joke

Reed Mideke

reedmideke@mastodon.social

Why am I so sure #AI companies have no serious technical or theoretical solution to the underlying problem that #LLMs have no concept of truth? The fact their approach so far is manually band-aiding results that go viral or put them in legal jeopardy is a pretty big hint! https://www.theverge.com/2024/5/24/24164119/google-ai-overview-mistakes-search-race-openai

Reed Mideke

reedmideke@mastodon.social

👉 "Gary Marcus, an AI expert and an emeritus professor of neural science at New York University, told The Verge that a lot of AI companies are “selling dreams” that this tech will go from 80 percent correct to 100 percent. Achieving the initial 80 percent is relatively straightforward since it involves approximating a large amount of human data, Marcus said, but the final 20 percent is extremely challenging. In fact, Marcus thinks that last 20 percent might be the hardest thing of all"

Reed Mideke

reedmideke@mastodon.social

What they're doing now seems like selling a calculator, and when a screenshot of it saying 2+2=5 goes viral on social media, they add a statement like "if x=2 and y=2 return 4" at the top of the program and say "see, we fixed it!"
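Written out as (deliberately silly) Python, the calculator analogy looks something like this. `buggy_add` is a hypothetical stand-in for a flaw that affects every input; the special case only hides the one input that went viral.

```python
def buggy_add(x: int, y: int) -> int:
    # Hypothetical underlying flaw: every result is off by one.
    return x + y + 1


def patched_add(x: int, y: int) -> int:
    # The "fix": special-case the screenshot that went viral.
    if x == 2 and y == 2:
        return 4
    return buggy_add(x, y)  # every other input is still wrong


print(patched_add(2, 2))  # 4, so the viral case now looks fixed
print(patched_add(2, 3))  # 6, because the underlying bug is untouched
```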

Reed Mideke

reedmideke@mastodon.social

Straight from Google CEO Sundar Pichai's mouth: 'these "hallucinations" are an "inherent feature" of AI large language models (LLM), which is what drives AI Overviews, and this feature "is still an unsolved problem"'

but they're gonna keep band-aiding until it's good, promise! "Are we making progress? Yes, we are … We have definitely made progress when we look at metrics on factuality year on year. We are all making it better, but it’s not solved"

Reed Mideke

reedmideke@mastodon.social

Just occurred to me Mitchell and Webb predicted our current pizza-gluing, gasoline spaghetti #AIIsGoingGreat moment 16 years ago https://www.youtube.com/watch?v=B_m17HK97M8

Reed Mideke

reedmideke@mastodon.social

Today's #AIIsGoingGreat - Meta's chatbot helpfully "confirms" a scammer's number is a legitimate Facebook support number

(of course, #LLMs just predict likely sequences of text, and for a question like this, "yes" is one of the high probability answers. There's no indication any of the companies hyping LLMs as a source of information have any serious solution for this kind of thing)

https://www.cbc.ca/news/canada/manitoba/facebook-customer-support-scam-1.7219581
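A toy illustration of the parenthetical above. The probabilities are invented for the example, not taken from any real model: a next-token predictor picks a likely continuation of the text (here by greedy decoding, i.e. the single most likely token), and nothing in that choice checks whether the answer is true.

```python
# Hypothetical next-token probabilities after a prompt like
# "Is 1-800-XXX-XXXX a legitimate Facebook support number? Answer:"
# (numbers are made up; real models score tens of thousands of tokens).
NEXT_TOKEN_PROBS = {
    " Yes": 0.62,
    " No": 0.21,
    " I": 0.09,   # e.g. the start of "I can't verify that..."
    " It": 0.08,
}


def greedy_next_token(probs: dict[str, float]) -> str:
    """Pick the highest-probability continuation (greedy decoding)."""
    return max(probs, key=probs.get)


answer = greedy_next_token(NEXT_TOKEN_PROBS)
print(repr(answer))  # ' Yes': chosen because it is likely text, not because anyone checked
```

Sampling instead of taking the maximum changes how often " Yes" comes out, not whether the number was ever looked up.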

Reed Mideke

reedmideke@mastodon.social

Kyle Orland hammers on my oft-repeated complaint (https://mastodon.social/@reedmideke/110063208987793683) that filtering your information through an #LLM *removes* useful context: "When Google's AI Overview synthesizes a new summary of the web's top results, on the other hand, all of this personal reliability and relevance context is lost. The Reddit troll gets mixed in with the serious cooking expert"

https://arstechnica.com/ai/2024/06/googles-ai-overviews-misunderstand-why-people-use-google/

Reed Mideke

reedmideke@mastodon.social

On the same note, today's #AIIsGoingGreat courtesy of @ppossej, observing Microsoft Copilot helpfully "summarizing" a phishing email. Even leaving aside the obvious problem here, what exactly is supposed to be the value of having an already short email filtered through spicy autocomplete? https://mastodon.social/@ppossej@aus.social/112555512126646188

Reed Mideke

reedmideke@mastodon.social
I initially dismissed today's #AIIsGoingGreat (HT @zhuowei) as a joke, but no*: "aiBIOS leverages an LLM to integrate AI capabilities into Insyde Software’s flagship firmware solution, InsydeH2O® UEFI BIOS. It provides the ability to interpret the PC user’s request, analyze their specific hardware, and parse through the LLM’s extensive knowledge base of BIOS and computer terminology to make the appropriate changes to the BIOS Setup"

* not an intentional one, anyway

https://www.insyde.com/press_news/press-releases/insyde%C2%AE-software-brings-higher-intelligence-pcs-aibios%E2%84%A2-technology-be

Reed Mideke

reedmideke@mastodon.social
Today's #AIIsGoingGreat features Zoom CEO Eric Yuan blazed out of his mind on his own supply: "Today for this session, ideally, I do not need to join. I can send a digital version of myself to join so I can go to the beach. Or I do not need to check my emails; the digital version of myself can read most of the emails. Maybe one or two emails will tell me, “Eric, it’s hard for the digital version to reply. Can you do that?”"

Reed Mideke

reedmideke@mastodon.social
"I truly hate reading email every morning, and ideally, my AI version for myself reads most of the emails. We are not there yet"

OK, points for recognizing we're "not there yet", in roughly the same sense the legend of Icarus foresaw intercontinental jet travel but was "not there yet"

Reed Mideke

reedmideke@mastodon.social
An actually interesting bit in that Eric Yuan interview: "every day, I personally spend a lot of time on talking with our customer’s prospects. Guess what? First question they all always ask me now is “What’s your AI strategy? What do you do to embrace AI?…”"

- even if exaggerated, seems like a good indicator of how deeply C-suite types have bought into the hype, which in turn means they all need an "AI strategy" no matter how ludicrous

Reed Mideke

reedmideke@mastodon.social
and the thing is, in terms of their personal incentives, they're probably not wrong. The analysts and shareholders and trade press want the new shiny thing, and if their current business gets caught on the wrong side of the bubble, they keep whatever bonuses they got in the interim and it probably won't hurt their future career prospects much

Reed Mideke

reedmideke@mastodon.social
Bonus #AIIsGoingGreat from NYT with a deep look at (now defunct) skeevy news outlet BNN Breaking: "employees were asked to put articles from other news sites into the [#LLM] tool so that it could paraphrase them, and then to manually “validate” the results by checking them for errors… Employees did not want their bylines on stories generated purely by A.I., but Mr. Chahal insisted on this. Soon, the tool randomly assigned their names to stories"

https://www.nytimes.com/2024/06/06/technology/bnn-breaking-ai-generated-news.html?u2g=i&unlocked_article_code=1.x00.zn0r.s2tFDDFWR0fo&smid=url-share

#GiftArticle #GiftLink #BNN

Reed Mideke

reedmideke@mastodon.social
#BNN founder Gurbaksh Chahal seems to be an all-around charming fellow "In 2013, he attacked his girlfriend at the time, and was accused of hitting and kicking her more than 100 times, generating significant media attention because it was recorded by a video camera he had installed in the bedroom … After an arrest involving another domestic violence incident with a different partner in 2016, he served six months in jail"

Reed Mideke

reedmideke@mastodon.social
Some might argue that making the #AI acronym do double duty with "Apple Intelligence" is a recipe for confusion, but after all the hype I find it refreshingly honest to position the product as "about as smart as a piece of fruit"

Reed Mideke

reedmideke@mastodon.social
That "#ChatGPT is bullshit" paper I boosted earlier does a nice job of laying out why the "hallucination" terminology is harmful: "what occurs in the case of an #LLM delivering false utterances is not an unusual or deviant form of the process it usually goes through… The very same process occurs when its outputs happen to be true"

https://link.springer.com/article/10.1007/s10676-024-09775-5

Reed Mideke

reedmideke@mastodon.social
The authors rightly object to "confabulation" for similar reasons: "This term also suggests that there is something exceptional occurring when the LLM makes a false utterance, i.e., that in these occasions - and only these occasions - it “fills in” a gap in memory with something false. This too is misleading. Even when the ChatGPT does give us correct answers, its process is one of predicting the next token"

Reed Mideke

reedmideke@mastodon.social
They are far from the first to make the connection between #LLMs and Frankfurtian bullshit, but humor aside, they do make a compelling case that the terminology matters https://link.springer.com/article/10.1007/s10676-024-09775-5#Sec12

Reed Mideke

reedmideke@mastodon.social
"Calling their mistakes ‘hallucinations’ isn’t harmless: it lends itself to the confusion that the machines are in some way misperceiving but are nonetheless trying to convey something that they believe or have perceived. This, as we’ve argued, is the wrong metaphor. The machines are not trying to communicate something they believe or perceive. Their inaccuracy is not due to misperception or hallucination … they are not trying to convey information at all. They are bullshitting"

Alpha Chen

alpha
I still think “confabulation” would be a better term for this than “hallucination”.

RE: https://mastodon.social/@reedmideke/112601424339903510

@alpha I've been calling them fabrications when you agree with them, but hallucinations when you don't