Posts



mikey

mikey@hachyderm.io

"The code stack is extremely brittle for no reason" has real big "I dunno, it was like that when I got here" stories-from-a-7-year-old energy

Mike Conley (:mconley) ⚙️

mconley@fosstodon.org

This is a pretty great document.

"How to Help Someone Use a Computer" by Phil Agre.

Anil Dash

anildash@me.dm

Has a single one of the countless corporate cheerleaders who said NYC should gift Amazon billions for HQ2 admitted they were wrong? Anyone? Or are they all just ignoring that things played out exactly as rational people knew they would? Let’s make a list of folks who promised it was definitely gonna help whatever city paid the bribe?

Neil Brown

neil@mastodon.neilzone.co.uk

When I am a dictator, it will be the law that any company which has a call-holding announcement of "we are experiencing a higher number of calls than usual" must publish ongoing and historic figures justifying this statement, and explaining what they intend to do about it.

Colin

csgordon@types.pl

I keep seeing a lot of stuff floating around saying things like "The problem isn’t that kids are using AI to write homework assignments. The problem is we’re assigning kids problems AI can do" (seen this morning) and I'm sorry but I find this take incredibly naive. I'm no fan of boring assignments where students do some activity just because and nobody seriously evaluates them or gives them feedback. That's a real problem, but not one this catchy suggestion addresses.

We still teach students addition, subtraction, etc., despite having calculators for decades. Why? Because if you only ever punch numbers into a calculator you don't actually understand numbers! We still teach students how to implement linked lists not because we need more linked list implementations, but because it's a stepping stone to understanding data structures in general. We don't ask students to write essays because we care about having more text sequences that take the form of an essay. We ask them to write essays so they can practice organizing their thoughts and stating their thoughts, opinions, and arguments clearly in a form that other humans can understand (to practice clear communication!).

Replace "AI" in the quote above with "online outlets that do your homework for a price." Or replace it with "parents." We ask for these things not because the outputs themselves are generally important, but because we care about the learning outcomes that arise from a student doing them; learning how to produce these outputs is how we teach students to think critically, or understand numbers or data structures. Yes, this can be (and often is) done poorly, and that needs fixing, 100%. But asking students to do things others already know how to do is a critical pedagogical tool for building understanding.

Never mind that these lines of argument give the "AI" too much credit. ChatGPT can't actually do math, for example; it just memorized millions of examples of doing thousands of math problems. It's why it screws up if you ask it to work with really large numbers: it hasn't seen those in its training data, so you get the output of smoothing an uneven probability distribution.

EDIT: I want to clarify something important: I'm specifically arguing against the idea that just because ChatGPT-like systems can "do" an assignment we should not use it for teaching anymore, which is some nonsense I've been hearing a lot lately. Because I wasn't clear, some people have read this post as implicitly defending business as usual. That's not what I mean. The examples I gave of assignments are things that *can* be used for excellent learning, but any style of assignment can be given or graded thoughtlessly in a way that leads to no learning at all, and I don't care to preserve those uses. So please do reevaluate assignments and toss ones that don't work; just please toss the ones that actually don't lead to learning (plenty of valid reasons to do this even before ChatGPT), and keep the ones that do even if a few students might use automated systems for them. We already have enough trouble with instructors more concerned with cheating detection than with learning outcomes.

Allen Downey

allendowney@fosstodon.org

Create techno-linguistic chaos in three easy steps:

1) Choose two words that are synonyms in normal use (bonus if they are phonetically similar).

2) Give them technical definitions that are subtly different.

3) Start correcting people when they use your new words wrong.

* sequence and series

* efficacy and effectiveness

* sensitivity and specificity

* confidence and credibility

* probability and likelihood

* energy and power

Enjoy centuries of ensuing confusion!

mtsw

mtsw@mastodon.social

Walgreens is restricting sales of abortion pills because they (probably correctly) believe they're more likely to face consequences for defying conservatives than for defying liberals, despite liberals' official control of the Presidency and Senate and nationwide popular majorities. Either use power yourself or watch your enemies use power instead! There's no alternative!

Timnit Gebru (she/her).

timnitGebru@dair-community.social

Not "AI" but SALAMI. H/t @emilymbender

by @quinta

"Let’s forget the term AI. Let’s call them Systematic Approaches to Learning Algorithms and Machine Inferences (SALAMI)."

jonathan w. y. gray 🐨

jwyg@post.lurk.org

New guide to "Finding Undocumented APIs" by @Leonyin at @themarkup – looks useful for internet studies researchers as well as digital reporters!

https://inspectelement.org/apis

Just added to this resource for our data journalism students at @kingsdh: https://github.com/jwyg/awesome-data-journalism/

Kevin Dorse

kdorse@ottawa.place

The cry that killed Web 2.0 was "Don't read the comments!"

Then to show what we'd learned for an encore we created platforms that were nothing but comments.

Edd Barrett

ebarrett@mastodon.social

Most absurd bug I've reported in some time:

https://github.com/jellyfin/jellyfin/issues/9406

Alpha Chen

alpha

A lovely demonstration of using StringScanner for parsing. One of the less-appreciated libraries in Ruby’s stdlib, but one I wind up using surprisingly often.

RE: https://mastodon.social/users/tenderlove/statuses/109961965253467776
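For readers who haven't used it, here is a minimal sketch of what StringScanner-based parsing can look like. The tiny arithmetic token set and the `tokenize` helper are hypothetical illustrations, not code from the linked thread; only `StringScanner` and its `scan`, `skip`, `eos?`, `pos`, and `peek` methods come from Ruby's `strscan` library.

```ruby
require "strscan"

# Hypothetical tokenizer for simple arithmetic such as "12 + (3 * 4)".
# StringScanner tracks a position in the string; each #scan or #skip call
# tries its regex at that position and advances past the match on success,
# returning nil (and not moving) on failure.
def tokenize(source)
  scanner = StringScanner.new(source)
  tokens = []

  until scanner.eos?
    if scanner.skip(/\s+/)
      next # whitespace separates tokens but produces none
    elsif (number = scanner.scan(/\d+/))
      tokens << [:number, number.to_i]
    elsif (op = scanner.scan(/[-+*\/]/))
      tokens << [:op, op]
    elsif (paren = scanner.scan(/[()]/))
      tokens << [:paren, paren]
    else
      # Nothing matched at the current position, so report where we got stuck.
      raise ArgumentError, "unexpected character at #{scanner.pos}: #{scanner.peek(1).inspect}"
    end
  end

  tokens
end

p tokenize("12 + (3 * 4)")
# => [[:number, 12], [:op, "+"], [:paren, "("], [:number, 3], [:op, "*"], [:number, 4], [:paren, ")"]]
```

Each branch tries one pattern at the scanner's current position, so the order of the branches decides which token wins when patterns could overlap.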

Justin Searls

searls@mastodon.social

lol when you ask DALL·E for a transparent background it will just rasterize the Photoshop transparent grid onto a JPG.

neat

Alpha Chen

alpha

This paper makes me think of some of the tradeoffs between writing and reading code, and abstraction: https://www.sciencedirect.com/science/article/abs/pii/S0010027711002496

… we argue that ambiguity can be understood by the trade-off between two communicative pressures which are inherent to any communicative system: clarity and ease. A clear communication system is one in which the intended meaning can be recovered from the signal with high probability. An easy communication system is one which signals are efficiently produced, communicated, and processed.

Alpha Chen

alpha

Interesting findings regarding breaks in experiments - I wonder what can be learned here and changed about breaks in long meetings?

Alpha Chen

alpha

Got to introduce new coworkers to my (not actually) radical idea that more companies should hire librarians.

Austin Kocher, PhD 🌎

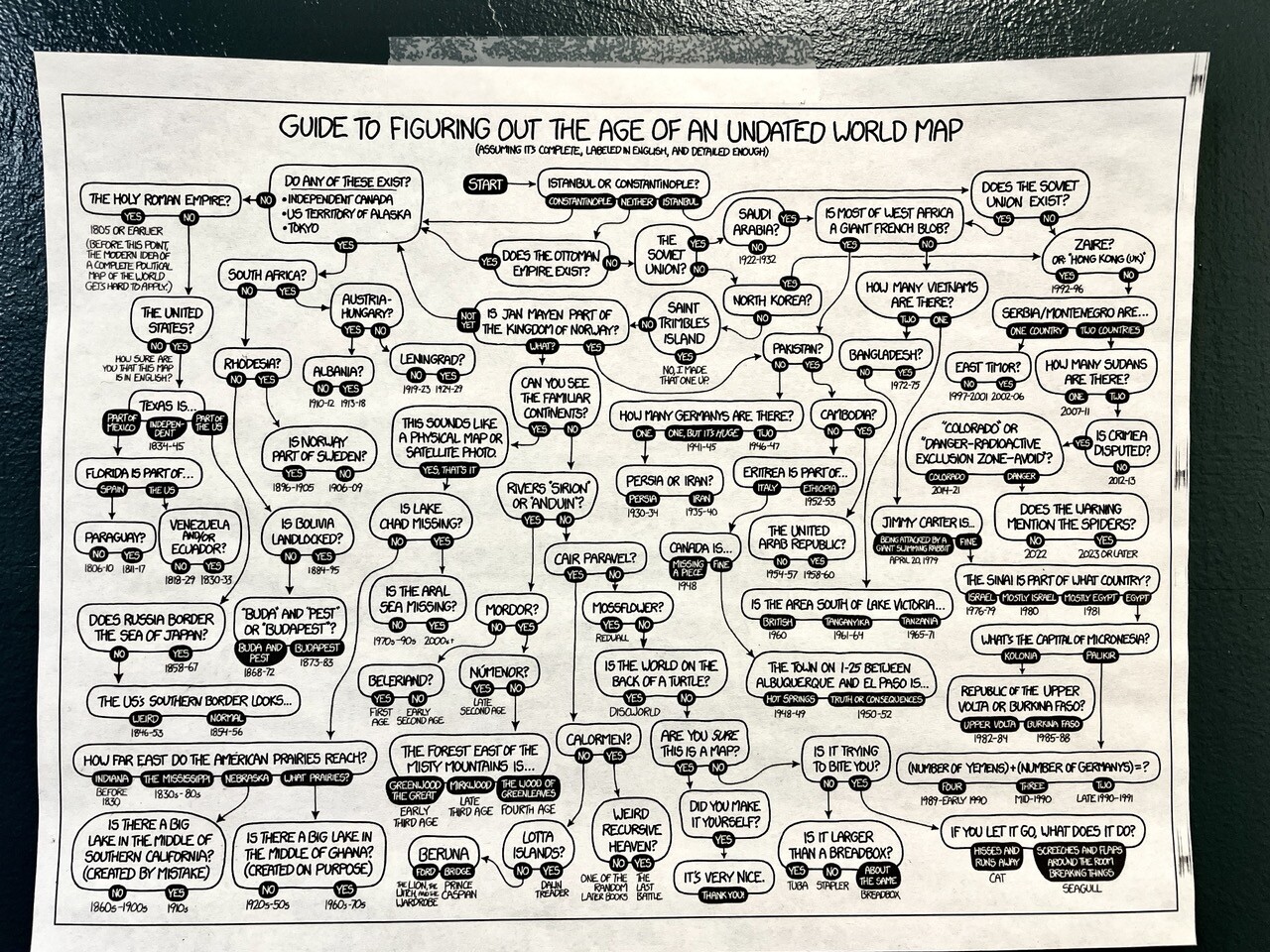

austinkocher@mastodon.social

I found this taped to the door of the geography department at GWU today and it brought me so much joy. A guide to figuring out when a map was made based on features. Zoom in. It’s amazing.

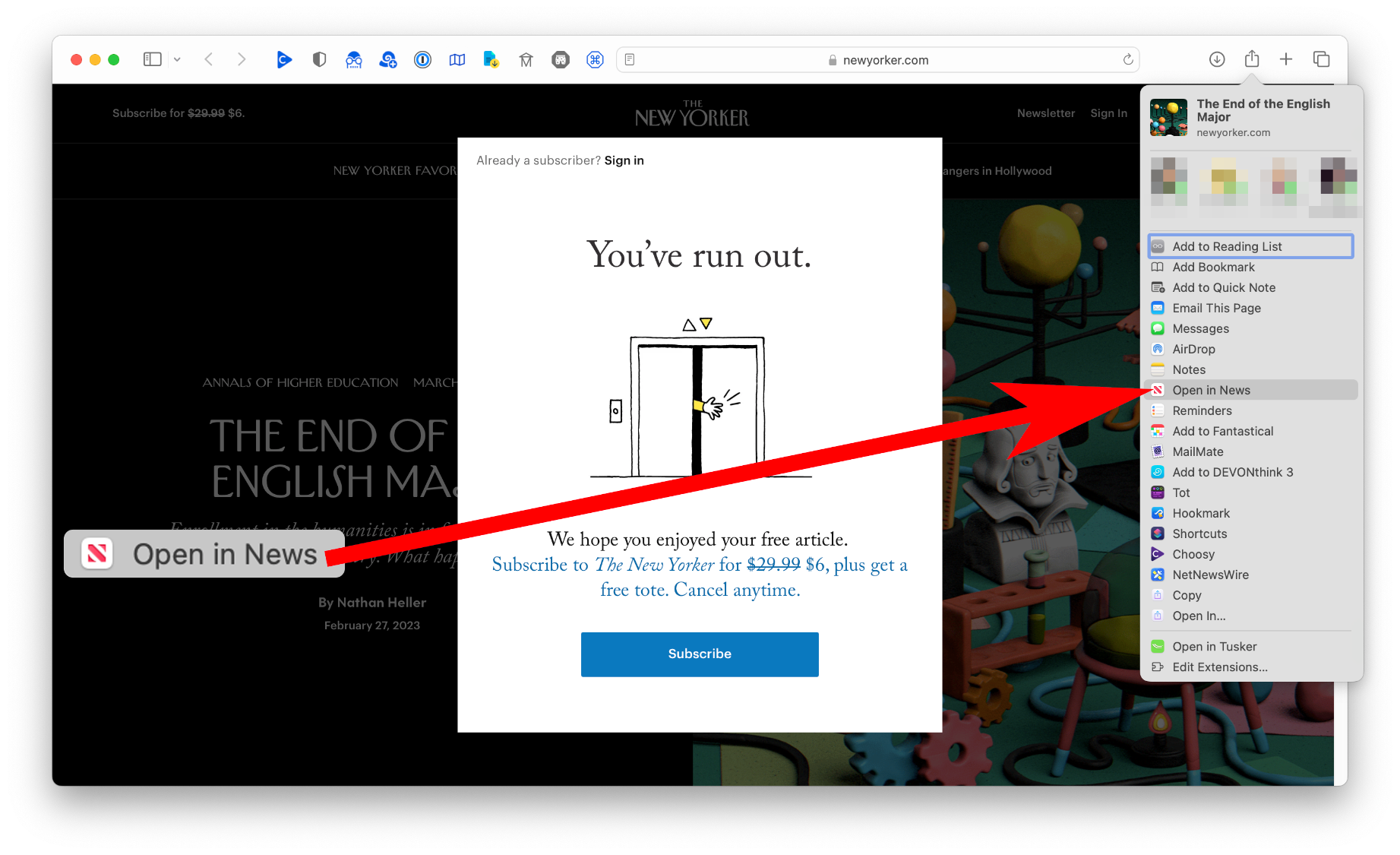

Ethan J. A. Schoonover

ethanschoonover@mastodon.social

Just a reminder that if you hit a paywall on the web and you subscribe to Apple News+ (we do via Apple One), you can use the Share sheet to open it there instead. If it is a publication carried by News+, you are past the paywall (and still supporting the publication).